Introduction to Tibero

This chapter describes the basic concepts, processes, and directory structures of Tibero.

Tibero, which manages massive amounts of data and ensures stable business continuity, provides the following key features required in an RDBMS environment.

Key Features

Distributed Database Link

This feature allows access to data stored in a different database instance. With it, read and write operations can be performed on data in a remote database across a network.

Data Replication

This feature copies all changes made in the operating database to a standby database. It does so by sending change logs over the network to the standby database, which then applies the changes to its own data.

Database Clustering

This feature addresses the biggest issues for any enterprise database: high availability and high performance. To achieve both, Tibero implements a technology called Tibero Active Cluster.

Database clustering allows multiple database instances to share a single database stored on a shared disk. Clustering must keep the internal database caches of all instances consistent with one another, and Tibero Active Cluster implements this as well.

Parallel Query Processing

Business data volumes keep growing. For this reason, processing massive amounts of data requires parallel processing technology that makes maximum use of server resources.

To meet these needs, Tibero supports transaction parallel processing functions optimized for Online Transaction Processing (OLTP) and SQL parallel processing functions adapted for Online Analytical Processing (OLAP). This improves query response time to support quick decision making for business.

Query Optimizer

The query optimizer decides the most efficient plan by considering various data handling methods based on statistics for the schema objects.

The query optimizer performs the following steps:

Creates various execution plans to process given SQL statements.

Calculates the cost of each execution plan by considering statistics on data distribution, tables, indexes, and partitions, as well as computer resources such as CPU and memory.

Selects the lowest cost execution plan.
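The three steps above can be sketched in a few lines of Python. The cost formula, the weights IO_WEIGHT and CPU_WEIGHT, and the Plan fields are hypothetical simplifications chosen for illustration; they are not Tibero's internal cost model.

```python
from dataclasses import dataclass

# Hypothetical weights: one block read costs 1 unit, one processed row 0.01 units.
IO_WEIGHT = 1.0
CPU_WEIGHT = 0.01

@dataclass
class Plan:
    name: str
    blocks_read: int     # estimated disk blocks to read (from statistics)
    rows_processed: int  # estimated rows the CPU has to handle

def plan_cost(plan):
    """Step 2: combine estimated I/O and CPU work into one comparable number."""
    return plan.blocks_read * IO_WEIGHT + plan.rows_processed * CPU_WEIGHT

def choose_plan(candidates):
    """Step 3: select the execution plan with the lowest estimated cost."""
    return min(candidates, key=plan_cost)

# Step 1: candidate plans for one SQL statement (made-up estimates).
candidates = [
    Plan("full table scan", blocks_read=1000, rows_processed=100_000),
    Plan("index range scan", blocks_read=40, rows_processed=500),
]
best = choose_plan(candidates)
print(best.name, plan_cost(best))
```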

Main features of the query optimizer are as follows:

Goal of query optimization

The goal of the query optimizer can be changed. The following table shows two examples.

| Goal | Description |
|---|---|
| Total processing time | The query optimizer can reduce the time to retrieve all rows by using the ALL_ROWS hint. |
| Initial response time | The query optimizer can reduce the time to retrieve the first row by using the FIRST_ROWS hint. |
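Why the two goals can lead to different plans is illustrated by the small sketch below. It assumes each candidate plan carries two hypothetical estimates, the cost until the first row is returned and the cost until all rows are returned; the choose function only mirrors the intent of the ALL_ROWS and FIRST_ROWS hints and does not reproduce how Tibero applies them.

```python
from typing import NamedTuple

class Plan(NamedTuple):
    name: str
    first_row_cost: float  # estimated cost until the first row is returned
    total_cost: float      # estimated cost until all rows are returned

def choose(plans, goal):
    """Pick a plan for an optimizer goal named after the ALL_ROWS / FIRST_ROWS hints."""
    if goal == "ALL_ROWS":
        return min(plans, key=lambda p: p.total_cost)      # minimize total processing time
    if goal == "FIRST_ROWS":
        return min(plans, key=lambda p: p.first_row_cost)  # minimize initial response time
    raise ValueError(f"unknown goal: {goal}")

# Hypothetical estimates for two plans of the same query.
plans = [
    Plan("hash join", first_row_cost=800.0, total_cost=1000.0),
    Plan("nested loop join", first_row_cost=2.0, total_cost=5000.0),
]
print(choose(plans, "ALL_ROWS").name)    # hash join
print(choose(plans, "FIRST_ROWS").name)  # nested loop join
```

The example numbers reflect a general tendency: a hash join must build its hash table before it can return any row, while a nested loop join can return matching rows immediately.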

Query transformation

A query can be transformed so that a better execution plan can be produced. Examples of query transformation include merging views, unnesting subqueries, and using materialized views.

Determining a data access method

Retrieving data from a database can be performed through a variety of methods such as a full table scan, an index scan, or a rowid scan. Because each method has different benefits depending on the amount of data and the filtering type, the best data access method may vary.
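To illustrate why the best access method depends on the amount of data and the filter, the following sketch compares a full table scan with an index scan using a textbook-style approximation. The formulas (every block for the full scan, one block per matching row for the index scan) are simplifying assumptions, not Tibero's cost model, and the table sizes are hypothetical.

```python
def full_scan_cost(table_blocks):
    # A full table scan reads every block once, whatever the filter is.
    return float(table_blocks)

def index_scan_cost(index_height, table_rows, selectivity):
    # Descend the index, then (pessimistically) read one table block per
    # matching row, as if the matching rows were scattered across the table.
    return index_height + table_rows * selectivity

def choose_access_method(table_blocks, table_rows, index_height, selectivity):
    full = full_scan_cost(table_blocks)
    index = index_scan_cost(index_height, table_rows, selectivity)
    return "index scan" if index < full else "full table scan"

# Hypothetical table: 100,000 rows in 1,000 blocks, with an index of height 3.
print(choose_access_method(1000, 100_000, 3, 0.001))  # index scan: 3 + 100 < 1000
print(choose_access_method(1000, 100_000, 3, 0.2))    # full table scan: 3 + 20000 > 1000
```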

Determining a join handling method

When joining data from multiple tables, the join order and the join method, such as a nested loop join, merge join, or hash join, must be determined. The order and method have a large effect on performance.
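The following sketch contrasts two of the join methods named above on in-memory rows: a nested loop join, which scans the inner input once per outer row, and a hash join, which builds a hash table on one input and probes it with the other. It is a generic illustration of the techniques, not Tibero's implementation; the DEPT and EMP rows and column names are hypothetical.

```python
from collections import defaultdict

def nested_loop_join(outer, inner, key):
    """For each outer row, scan every inner row: about len(outer) * len(inner) comparisons."""
    return [{**o, **i} for o in outer for i in inner if o[key] == i[key]]

def hash_join(build, probe, key):
    """Build a hash table on one input, then probe it: about len(build) + len(probe) steps."""
    table = defaultdict(list)
    for row in build:                               # build phase
        table[row[key]].append(row)
    result = []
    for row in probe:                               # probe phase
        for match in table.get(row[key], []):
            result.append({**match, **row})
    return result

# Hypothetical DEPT and EMP rows joined on DEPT_ID.
dept = [{"dept_id": 10, "dname": "SALES"}, {"dept_id": 20, "dname": "R&D"}]
emp = [
    {"ename": "KIM", "dept_id": 10},
    {"ename": "LEE", "dept_id": 20},
    {"ename": "PARK", "dept_id": 30},  # no matching department
]

nl = nested_loop_join(emp, dept, "dept_id")
hj = hash_join(dept, emp, "dept_id")
for row in hj:
    print(row["ename"], row["dname"])
```

Both methods return the same rows; which input serves as the outer (or build) side is exactly the join-order decision the optimizer has to make.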

Cost estimation

The cost of each execution plan is estimated based on statistics such as predicate selectivity and the number of data rows.
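A common textbook way to turn statistics into row estimates is sketched below: equality selectivity as 1 divided by the number of distinct values, range selectivity as the covered fraction of the value range, and multiplication for predicates combined with AND. These formulas assume uniformly distributed, independent data and are shown only to illustrate the idea; they are not necessarily what Tibero computes, and the statistics are hypothetical.

```python
def equality_selectivity(num_distinct):
    # col = value: each distinct value is assumed to match 1/NDV of the rows.
    return 1.0 / num_distinct

def range_selectivity(low, high, col_min, col_max):
    # low <= col <= high: fraction of the column's value range covered, clamped to [0, 1].
    if col_max == col_min:
        return 1.0 if low <= col_min <= high else 0.0
    covered = (min(high, col_max) - max(low, col_min)) / (col_max - col_min)
    return max(0.0, min(1.0, covered))

def estimated_rows(num_rows, *selectivities):
    # Predicates combined with AND are assumed to be independent.
    rows = float(num_rows)
    for s in selectivities:
        rows *= s
    return rows

# Hypothetical statistics: 50,000 rows, 25 distinct DEPT_ID values,
# SALARY between 1,000 and 11,000.
sel_dept = equality_selectivity(25)                       # 0.04
sel_salary = range_selectivity(6000, 11000, 1000, 11000)  # 0.5
print(estimated_rows(50_000, sel_dept, sel_salary))       # about 1,000 rows
```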

Basic Properties

Tibero guarantees reliable and consistent database transactions, which are logical sets of SQL statements, by supporting the following four properties.

Atomicity

Each transaction is all or nothing; all results of a transaction are applied or nothing is applied. To accomplish this, Tibero uses undo data.
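The role of undo data in atomicity can be shown with a toy key-value transaction. The Transaction class and its methods are hypothetical; the sketch only records a before-image for each write and restores those images in reverse order on rollback, and it ignores concurrency and persistence.

```python
class Transaction:
    """Toy transaction over a dict that keeps undo records (before-images)."""

    _MISSING = object()  # marks keys that did not exist before the write

    def __init__(self, store):
        self.store = store
        self.undo = []  # (key, before-image), newest last

    def write(self, key, value):
        # Save the before-image first so that the change can be undone.
        self.undo.append((key, self.store.get(key, self._MISSING)))
        self.store[key] = value

    def commit(self):
        # All changes stay applied; in this toy the undo records are simply discarded.
        self.undo.clear()

    def rollback(self):
        # Restore before-images in reverse order so nothing of the transaction remains.
        while self.undo:
            key, before = self.undo.pop()
            if before is self._MISSING:
                del self.store[key]
            else:
                self.store[key] = before


accounts = {"A": 100, "B": 50}
tx = Transaction(accounts)
tx.write("A", accounts["A"] - 30)  # debit A
tx.write("C", 30)                  # credit a mistyped account C
tx.rollback()                      # all or nothing: both writes are undone
print(accounts)                    # {'A': 100, 'B': 50}
```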

Consistency

Every transaction complies with the rules defined in the database regardless of its outcome, so the database is always consistent. A transaction could break a database's consistency in many ways; for example, an inconsistency occurs when a table and its index contain different data. To deal with this problem, Tibero never allows only part of a transaction to be applied. Therefore, even if a transaction has modified a table but not yet the related index, other transactions see only the unmodified data and can assume that the table and the index are consistent.

Isolation

A transaction is not interfered with by other transactions. While a transaction is modifying data, other transactions that want to modify the same data must wait, and no operation in another transaction can use the uncommitted changes. No error occurs in this case; the other transactions simply wait. To accomplish this, Tibero uses two methods: multi-version concurrency control (MVCC) and row-level locking. When data is read, MVCC allows the read without interrupting other transactions. When data is modified, fine-grained row-level locks minimize conflicts, and a transaction that needs a locked row waits until the lock is released.
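A toy model of the two methods follows. MVCCStore keeps several committed versions of each row and lets a reader see the newest version committed at or before its snapshot, while RowLocks grants a lock per row so that only transactions touching the same row conflict. Both classes, the integer commit counter, and the method names are hypothetical simplifications; uncommitted versions and lock wait queues are not modeled.

```python
class MVCCStore:
    """Toy multi-version store: committed writes add versions, reads use snapshots."""

    def __init__(self):
        self.commit_no = 0  # hypothetical counter incremented at every commit
        self.versions = {}  # row -> list of (commit_no, value), oldest first

    def commit_write(self, row, value):
        # A committed change adds a new version instead of overwriting the old one.
        self.commit_no += 1
        self.versions.setdefault(row, []).append((self.commit_no, value))

    def snapshot(self):
        # A reader remembers the commit number that was current when it started.
        return self.commit_no

    def read(self, row, snapshot):
        # Newest version committed at or before the snapshot; readers take no locks.
        for commit_no, value in reversed(self.versions.get(row, [])):
            if commit_no <= snapshot:
                return value
        return None


class RowLocks:
    """Toy row-level lock table: only writers of the same row conflict."""

    def __init__(self):
        self.owner = {}  # row -> id of the transaction holding the lock

    def try_lock(self, row, tx_id):
        # Grant the lock if the row is free or already held by tx_id;
        # False means the caller must wait for the holder to finish.
        return self.owner.setdefault(row, tx_id) == tx_id

    def release_all(self, tx_id):
        # Called when the transaction commits or rolls back.
        for row in [r for r, t in self.owner.items() if t == tx_id]:
            del self.owner[row]


store = MVCCStore()
store.commit_write("r1", "v1")
reader_snapshot = store.snapshot()         # a reader starts here
store.commit_write("r1", "v2")             # a later change by a writer
print(store.read("r1", reader_snapshot))   # v1: the reader keeps its consistent view
print(store.read("r1", store.snapshot()))  # v2

locks = RowLocks()
print(locks.try_lock("r1", tx_id=1))  # True
print(locks.try_lock("r1", tx_id=2))  # False: transaction 2 waits for row r1
print(locks.try_lock("r2", tx_id=2))  # True: a different row does not conflict
```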

Durability

Once a transaction has been committed, its changes must be permanent even if a failure such as a power loss or a system breakdown occurs. To accomplish this, Tibero uses redo logs and write-ahead logging. When a transaction is committed, the relevant redo logs are written to disk to guarantee the transaction's durability. Before a data block is written to disk, the corresponding redo logs are written first to guarantee database consistency.
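The write-ahead logging rule can be sketched as follows. WalEngine and its attributes are hypothetical; the sketch keeps redo records in a memory buffer, forces them to a simulated on-disk log at commit and before any data block is written, and replays the on-disk log after a simulated crash. Undoing uncommitted changes during recovery is not modeled.

```python
class WalEngine:
    """Toy storage engine that follows the write-ahead logging rule."""

    def __init__(self):
        self.log_buffer = []   # redo records still in memory
        self.disk_log = []     # redo records on disk; survive a crash
        self.cache = {}        # modified blocks in memory
        self.disk_blocks = {}  # data blocks on disk; survive a crash

    def update(self, block, value):
        # Generate a redo record for the change, then modify the cached block.
        self.log_buffer.append((block, value))
        self.cache[block] = value

    def flush_log(self):
        # Move all buffered redo records to the on-disk log.
        self.disk_log.extend(self.log_buffer)
        self.log_buffer.clear()

    def commit(self):
        # Durability: a commit completes only after its redo is on disk.
        self.flush_log()

    def write_block(self, block):
        # Write-ahead rule: the redo reaches disk before the data block does.
        self.flush_log()
        self.disk_blocks[block] = self.cache[block]

    def crash_and_recover(self):
        # A crash loses everything held in memory.
        self.log_buffer.clear()
        self.cache.clear()
        # Recovery replays the on-disk redo log onto the data blocks.
        for block, value in self.disk_log:
            self.disk_blocks[block] = value


engine = WalEngine()
engine.update("A", 1)
engine.commit()            # redo for A is on disk; block A itself is not
engine.update("B", 2)      # not committed; its redo is only in memory
engine.crash_and_recover()
print(engine.disk_blocks)  # {'A': 1}
```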

Process Structure

Tibero has a multi-process, multi-thread architecture that allows access by a large number of users.

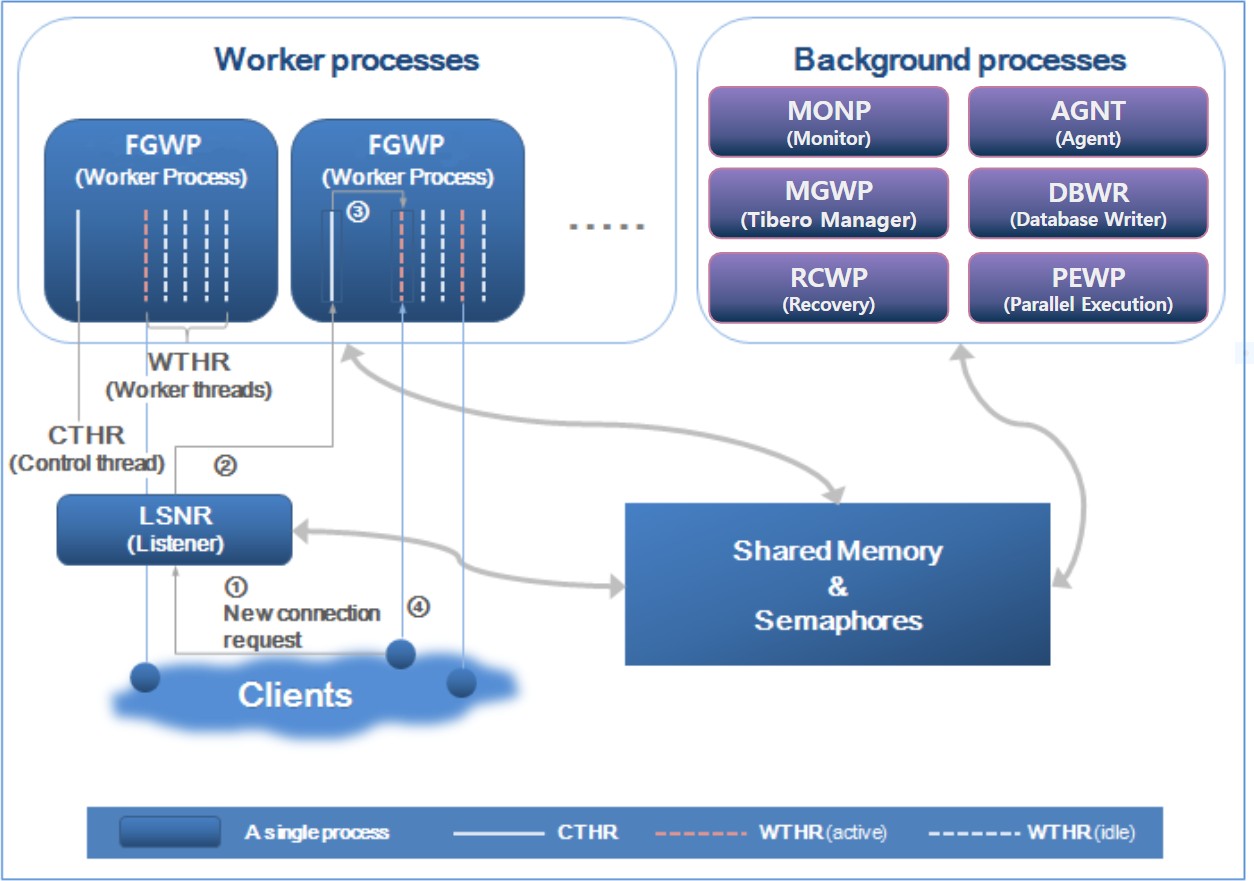

The following figure shows the process structure of Tibero:

Tibero consists of the following three types of processes:

Listener

Worker Process or Foreground Process

Background Process

Listener

The listener receives requests for new connections from clients and assigns them to available worker processes. It acts as an intermediary between clients and worker processes and runs as tblistener, an independent executable file. Starting in Tibero 6, listeners are created by MONP and are recreated if they are forcibly terminated.

A new connection from a client is processed as follows (refer to [Figure 1]).

The listener searches for a worker process that has an available worker thread and sends the connection request to that worker process (①).

Because the connection is handed over as a file descriptor, the client operates as if it had been connected to the worker thread from the start, regardless of the server's internal operations.

The control thread that receives the request from the listener checks the status of the worker threads in the same worker process (②) and assigns an available worker thread to the client connection (③).

The assigned worker thread and the client perform authentication before the session starts (④).
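The numbered steps can be modeled with a small sketch. WorkerProcess, its control-thread method assign, and listener_dispatch are hypothetical names; the sketch only tracks which worker threads are busy and does not model file-descriptor passing or authentication (④).

```python
from dataclasses import dataclass, field

@dataclass
class WorkerProcess:
    pid: int
    threads_per_proc: int                         # plays the role of WTHR_PER_PROC
    sessions: dict = field(default_factory=dict)  # worker thread index -> client id

    def has_idle_thread(self):
        return len(self.sessions) < self.threads_per_proc

    def assign(self, client_id):
        """Control thread: check worker thread status (2) and assign an idle one (3)."""
        for thread_no in range(self.threads_per_proc):
            if thread_no not in self.sessions:
                self.sessions[thread_no] = client_id
                return thread_no
        raise RuntimeError("no idle worker thread")

def listener_dispatch(procs, client_id):
    """Listener: find a worker process with an idle worker thread and hand over the connection (1)."""
    for proc in procs:
        if proc.has_idle_thread():
            return proc.pid, proc.assign(client_id)
    raise RuntimeError("all worker threads are busy")

# Two worker processes with two worker threads each: at most four sessions.
procs = [WorkerProcess(pid, threads_per_proc=2) for pid in (101, 102)]
for client in ("c1", "c2", "c3", "c4"):
    print(client, listener_dispatch(procs, client))
```

A fifth connection attempt raises an error in this sketch, which corresponds to reaching the session limit of WTHR_PROC_CNT multiplied by WTHR_PER_PROC.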

Worker Process

A worker process communicates with client processes and handles user requests. When the server starts, Tibero creates multiple worker processes to support connections from multiple client processes. The number of these processes is set with the initialization parameter WTHR_PROC_CNT and cannot be modified once Tibero starts, so set it appropriately for the system environment.

Starting in Tibero 6, worker processes are divided into two groups based on their purpose.

A background worker process performs batch jobs registered with an internal task or the job scheduler, while a foreground worker process performs online requests sent through the listener. The size of the groups can be adjusted with the initialization parameter MAX_BG_SESSION_COUNT.

For efficient use of resources, Tibero executes tasks by thread. In Tibero, a single worker process contains one control thread and ten worker threads by default.

The number of worker threads per process is set with the initialization parameter WTHR_PER_PROC. As with WTHR_PROC_CNT, this number cannot be modified once Tibero starts.

Rather than modifying WTHR_PROC_CNT and WTHR_PER_PROC arbitrarily, it is recommended to set the maximum number of sessions with the MAX_SESSION_COUNT initialization parameter.

WTHR_PROC_CNT and WTHR_PER_PROC are set automatically according to the MAX_SESSION_COUNT value. If you set WTHR_PROC_CNT and WTHR_PER_PROC yourself, their product must equal MAX_SESSION_COUNT.

MAX_BG_SESSION_COUNT must be smaller than MAX_SESSION_COUNT and must be a multiple of WTHR_PER_PROC.
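The sizing rules above can be expressed as a small consistency check. check_worker_params is a hypothetical helper, not a Tibero utility, and the parameter values in the example are illustrative only.

```python
def check_worker_params(max_session_count, wthr_proc_cnt, wthr_per_proc, max_bg_session_count):
    """Return the list of violated rules; an empty list means the values are consistent."""
    errors = []
    if wthr_proc_cnt * wthr_per_proc != max_session_count:
        errors.append("WTHR_PROC_CNT * WTHR_PER_PROC must equal MAX_SESSION_COUNT")
    if max_bg_session_count >= max_session_count:
        errors.append("MAX_BG_SESSION_COUNT must be smaller than MAX_SESSION_COUNT")
    if max_bg_session_count % wthr_per_proc != 0:
        errors.append("MAX_BG_SESSION_COUNT must be a multiple of WTHR_PER_PROC")
    return errors

print(check_worker_params(100, 10, 10, 20))  # []
for message in check_worker_params(100, 8, 10, 25):
    print(message)
```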

A worker process performs jobs using the given control thread and worker threads.

Control Thread

Each worker process has one control thread, which plays the following roles:

Creates as many worker threads as specified in the initialization parameter when Tibero is started.

Allocates a new client connection to an idle worker thread when requested.

Handles signal processing.

Supports I/O multiplexing and sends and receives messages on behalf of the worker threads.

Worker Thread

A worker thread communicates one-on-one with a client. It receives and processes messages from the client and returns the results. It handles most DBMS jobs, such as SQL parsing and optimization. Because each worker thread is connected to a single client, the number of clients that can connect to Tibero RDBMS simultaneously equals WTHR_PROC_CNT multiplied by WTHR_PER_PROC. Tibero RDBMS does not support session multiplexing, which means a single client connection represents a single session. Therefore, the maximum number of sessions also equals WTHR_PROC_CNT multiplied by WTHR_PER_PROC.

A worker thread does not disappear when it is disconnected from its client. It is created when Tibero starts and exists until the database shuts down. With this architecture, threads do not need to be created or destroyed at each client reconnection, no matter how many reconnections occur, which improves overall system performance.

However, as many threads as the value specified by the initialization parameter must be created even if the actual number of clients is smaller. These threads continuously consume OS resources, but the share of resources needed to maintain a single thread is very small relative to the whole system, so system operation is hardly affected.
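The idea of long-lived worker threads that are created once and reused across connections can be sketched with Python's threading and queue modules. Each queue item stands for one client connection served by whichever pre-created thread picks it up; the thread count, the serve_connections helper, and the "wthr-N" names are hypothetical, and Python threads stand in for Tibero's worker threads only loosely.

```python
import queue
import threading

def worker_thread(connections, served, lock):
    # Lives from "startup" to "shutdown" and serves one connection after
    # another, so no thread is created or destroyed per connection.
    while True:
        conn = connections.get()
        if conn is None:  # shutdown signal
            return
        with lock:
            served.append((threading.current_thread().name, conn))

def serve_connections(num_threads, client_connections):
    connections = queue.Queue()
    served, lock = [], threading.Lock()
    threads = [
        threading.Thread(target=worker_thread, args=(connections, served, lock), name=f"wthr-{i}")
        for i in range(num_threads)
    ]
    for t in threads:  # all threads are created up front, even if few clients connect
        t.start()
    for conn in client_connections:
        connections.put(conn)
    for _ in threads:  # one shutdown signal per thread
        connections.put(None)
    for t in threads:
        t.join()
    return served

served = serve_connections(3, [f"client-{n}" for n in range(10)])
print(len(served), sorted({name for name, _ in served}))
```

Ten connections are handled by at most three threads; which thread serves which connection depends on scheduling.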

Caution

A large number of worker threads attempting to execute tasks concurrently may place an excessive load on the OS, which can significantly reduce system performance.

When implementing a large-scale system, therefore, it is recommended to configure a 3-Tier architecture by installing middleware between Tibero and the client application.

Background Process

Background processes are independent processes that primarily perform time-consuming disk operations at specified intervals or at the request of a worker thread or another background process.

The following are the processes comprising the background process group:

Monitor Process (MONP)

An independent process. Starting in Tibero 6, it runs as a process rather than a thread. It is the first process created after Tibero starts and the last process to finish when Tibero terminates.

The monitor process creates the other processes, including the listener, when Tibero starts. It also periodically checks the status of each process and checks for deadlocks.
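The text does not describe how deadlocks are detected. A standard technique is to search a wait-for graph for a cycle, sketched below with a depth-first search; the graph layout and session names are hypothetical, and this is not presented as Tibero's algorithm.

```python
def find_deadlock(wait_for):
    """Return one cycle in a wait-for graph as a list of sessions, or None.

    wait_for maps each session to the sessions whose locks it is waiting for.
    """
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    color = {}
    path = []

    def dfs(session):
        color[session] = GRAY
        path.append(session)
        for holder in wait_for.get(session, []):
            state = color.get(holder, WHITE)
            if state == GRAY:  # back edge: the wait chain loops onto itself
                return path[path.index(holder):] + [holder]
            if state == WHITE:
                cycle = dfs(holder)
                if cycle:
                    return cycle
        path.pop()
        color[session] = BLACK
        return None

    for session in wait_for:
        if color.get(session, WHITE) == WHITE:
            cycle = dfs(session)
            if cycle:
                return cycle
    return None

# S1 waits for S2, S2 for S3, and S3 for S1: a deadlock. S4 merely waits on S1.
waits = {"S1": ["S2"], "S2": ["S3"], "S3": ["S1"], "S4": ["S1"]}
print(find_deadlock(waits))                     # ['S1', 'S2', 'S3', 'S1']
print(find_deadlock({"S1": ["S2"], "S2": []}))  # None
```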

Tibero Manager Process (MGWP)

A process intended for system management. MGWP receives a manager's connection request and allocates a thread that is reserved for system management. MGWP basically performs the same role as a worker process but it directly handles the connection through a special port. Only the SYS account is allowed to connect to the special port.

Agent Process (AGNT)

A process that performs internal Tibero tasks required for system maintenance.

Until version 4SP1, this process stored sequence cache values to disk, but starting in Tibero 5, each worker thread stores them individually. The name "SEQW" was changed to "AGNT" starting in Tibero 6.

In Tibero 6, AGNT runs based on a multi-threaded structure, and threads are allocated per workload.

Database Write Process (DBWR)

A collection of threads that write database updates to disk. DBWR includes threads that periodically write user-modified blocks to disk, threads that write redo logs to disk, and checkpoint threads that manage the database's checkpoint process.

Recovery Worker Process (RCWP)

A process dedicated to recovery and backup operations. During startup, it advances the runlevel beyond NOMOUNT mode, determines whether recovery is necessary, and if so, performs recovery by reading the redo logs. It also executes backup operations run with tbrmgr and media recovery using backups.

Parallel Execution Worker Process (PEWP)

A process dedicated to parallel execution (PEP). When processing parallel execution (PE) SQL, one PEP allocates multiple WTHRs to maximize locality. Because this process is separated from the WTHRs that serve normal client sessions, it is easy to monitor and manage.
